Might there be benefits in generative AI solutions that hallucinate, make things up and show bias?

We live in a world of convenience. Once upon a time we had to do research in a library, going through card indexes and using the bibliography of one book to identify further reading, which then meant hunting through the library for additional books before summarising everything we had read into our own piece of work. Then Google came along and we could search far faster, getting instant lists of articles or websites. We still needed to look at the content our searches yielded, identify the best sources and then mould this into our own final piece of work. Things had become more convenient, which was good, but with this came some drawbacks. As users we tended to look only at the first page of search results rather than at subsequent pages, meaning we lost some of the opportunities for accidental learning where, in a library, your search for one book might lead you to stumble upon other books which add to your learning. Our searches were also now being partially manipulated by algorithms: the search wasn’t a simple lookup like that of a card index, but one in which an algorithm predicted what we might want, what is popular, etc., before returning its results. These algorithms reduced the transparency of the searching process, potentially meaning our eventual work had been partially influenced by unknown algorithmic hands. Next came the push for “voice-first” where, rather than a list of search results, our new voice assistant would boil down its response to a single answer spoken with some artificial authority.

So roll in generative AI, ChatGPT and Bard. Now we have a tool which will search for content but will also then attempt to synthesise this into a new piece of work. It doesn’t just find the sources; it summarises, expands and explains. This is further convenience, combined with further challenges or risks. But what if there are benefits from some of these challenges, such as the hallucinations and the bias? Is that possible?

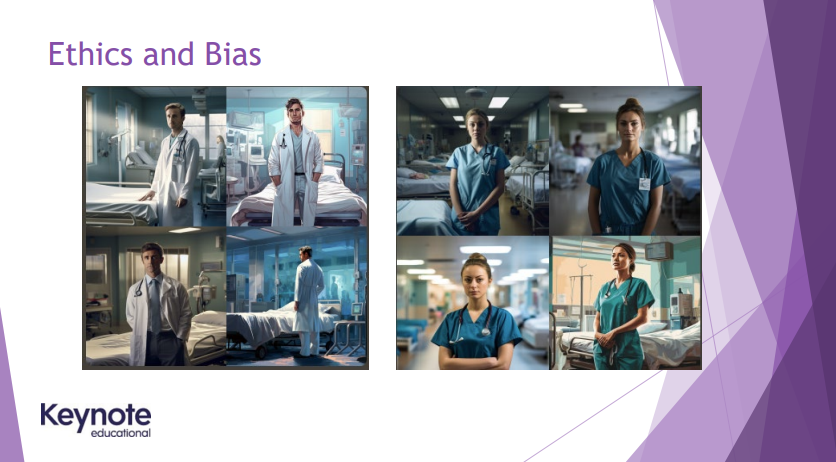

Let’s step back to the library. My search was based on my decisions as to which books to select, with my reading and book selections then influencing the further reading I did. Bias and error may have been in the books, but I could focus on thinking about such bias and error, with error generally a low risk due to the editorial review processes associated with publishing a book. In the modern world, however, my information might come to me via social media platforms where an algorithm is at play in what I see, choosing what to surface and what not to. Additionally, content might be written by individuals or groups without any editorial process, meaning a greater risk of error or bias. And with generative AI now widely available, we might find content awash with subtle bias or simply containing errors and misunderstandings presented confidently as fact. As an individual trying to do some research, I have more to think about than just the content. I need to think more about who wrote the content, how it came to me, what the motivation of the writer was, whether generative AI may have been used, etc. In effect I need to be more critical than I might have been back in the library.

And maybe this is where the obvious hallucinations and bias are useful, as they highlight our need for criticality when dealing with generative AI content, but also with the wider content available in this digital world, such as the content with which we are constantly bombarded via social media. In a world of ever-increasing content, increasing division between groups and nations, and an increasing number of individuals contributing, whether for positive or sometimes malicious reasons, being critical of content may now be the most important skill.

If it weren’t for these imperfections, would we see the need to be critical, in a world where I suspect a critical view is all the more important? And can we humans claim to be without some imperfections of our own? Could it therefore be that the issues or challenges of generative AI, its hallucinations and bias, are actually desirable imperfections?